Ryan and I were on This Week in Law today to discuss a variety of current legal topics. If you missed the live show, you can watch the video online. Thanks to Evan Brown for a great show!

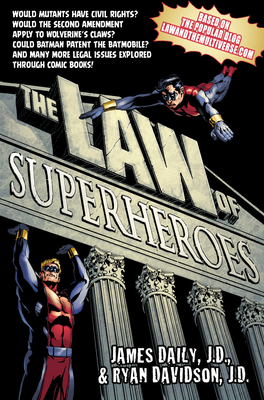

The Book

Our new book, The Law of Superheroes, is now available! Order your copy today!


Email

Send in your post ideas, questions, and comments:

James Daily

Law and the Multiverse CLE

On-demand CLE courses from Law and the Multiverse, presented by Thomson West:

What Superheroes and Comic Books Can Teach Us About Constitutional Law

Real-Life Superheroes in the World of Criminal Law

Everyday Ethics from Superhero Attorneys

Kapow! What Superheroes and Comic Books Can Teach Us About Torts


Disclaimer

On this blog we discuss fictional scenarios; nothing on this blog is legal advice. No attorney-client relationship is created by reading the blog or writing comments, even if the authors write back. The authors speak only for themselves, and nothing on this blog is to be considered the opinions or views of the authors’ employers.
